Apache Flume: Distributed Log Collection for Hadoop is intended for people who are responsible for moving datasets into Hadoop in a timely and reliable manner, such as software engineers, database administrators, and data warehouse administrators.

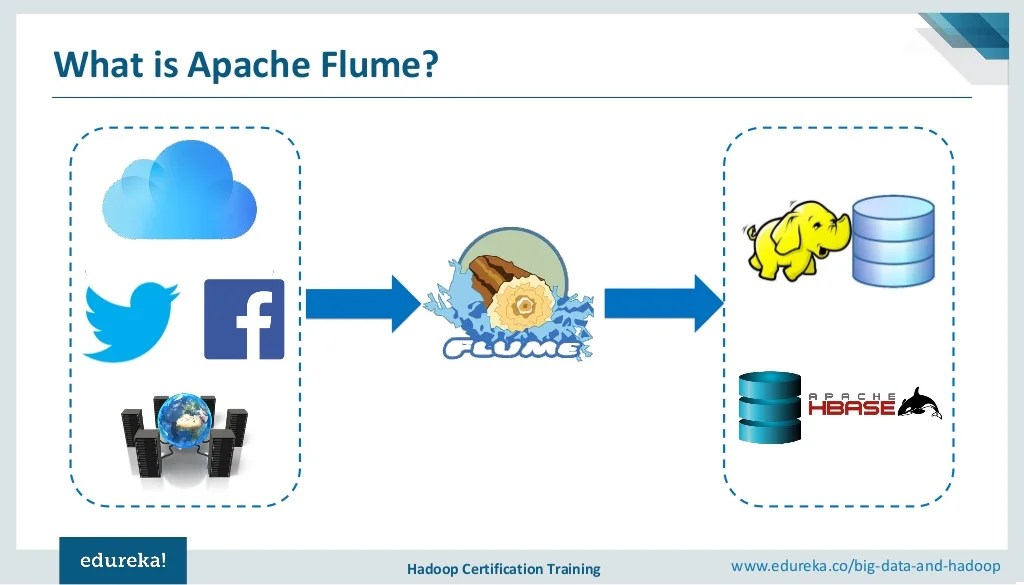

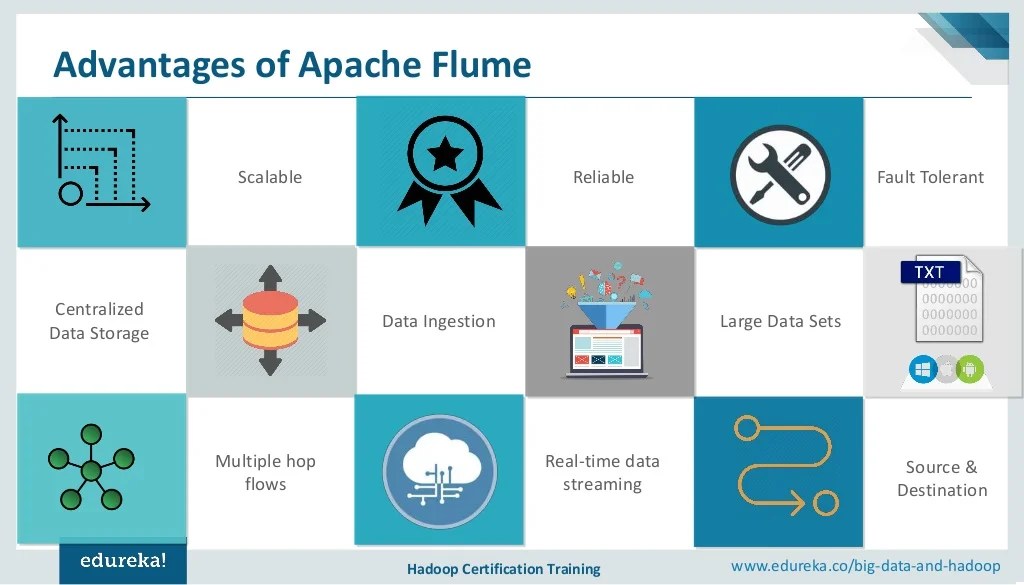

Apache Flume Book: Using Flume is one of the books in the so-called big data series. Construct a series of Flume agents using the Apache Flume service to efficiently collect, aggregate, and move large amounts of event data. Flume has a simple and flexible architecture based on streaming data flows.
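
The source, channel, and sink data flow can be sketched in a few lines of Python. This is a minimal illustration, not Flume's API (Flume itself is written in Java and assembled through configuration files): `Event`, `MemoryChannel`, and `run_flow` are hypothetical names, and the channel is assumed to be a simple bounded in-memory FIFO queue.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional


@dataclass
class Event:
    """Flume-style event: a byte payload plus optional string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Bounded FIFO buffer that decouples a source from a sink (illustrative)."""

    def __init__(self, capacity: int = 100) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._queue: deque = deque()

    def put(self, event: Event) -> bool:
        """Append an event; return False instead of blocking when full."""
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(event)
        return True

    def take(self) -> Optional[Event]:
        """Remove and return the oldest event, or None if the channel is empty."""
        return self._queue.popleft() if self._queue else None


def run_flow(lines: Iterable[str], channel: MemoryChannel,
             sink: Callable[[Event], None]) -> None:
    """Source side wraps each line in an Event; sink side drains the channel."""
    for line in lines:
        event = Event(body=line.encode("utf-8"))
        while not channel.put(event):
            # Channel full: let the sink drain one event, then retry the put.
            sink(channel.take())
    # Drain whatever is still buffered once the source is exhausted.
    while (event := channel.take()) is not None:
        sink(event)
```

The design point is decoupling: the source only ever talks to the channel, and the sink only ever reads from it. When the channel is full, this sketch lets the sink drain one event before retrying the put; real Flume instead signals a full channel to the source with an exception, and the source is responsible for retrying.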

Learn about Flume and Apache Kafka integration. About the tutorial: Flume is a standard, simple, robust, flexible, and extensible tool for ingesting data from various data producers (such as web servers) into Hadoop. Audience: this tutorial is meant for all professionals who would like to learn Apache Flume.

Instant Apache Sqoop eBook

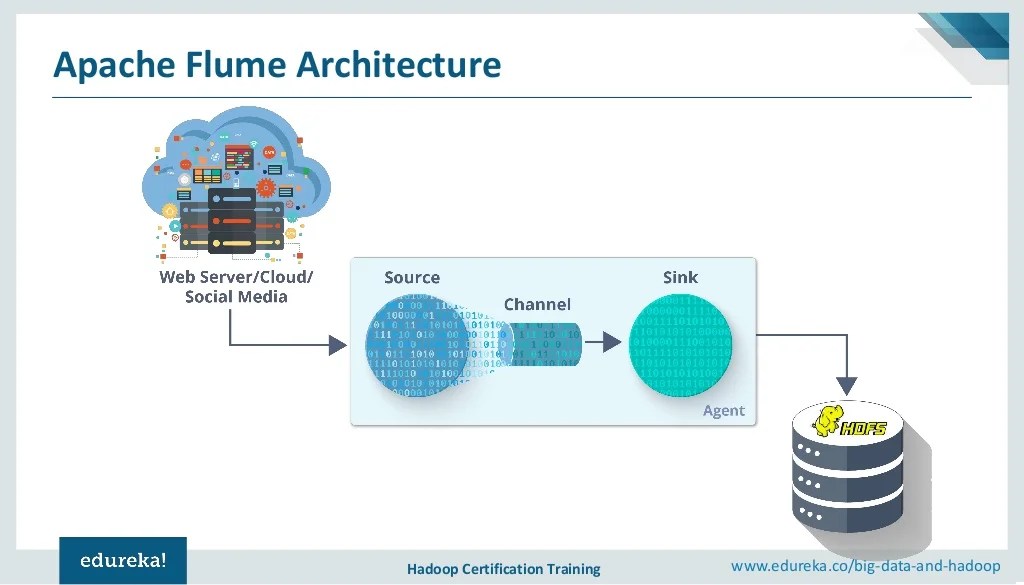

I couldn't ask for a better friend daily by my side. Using Apache Flume, we can store the data in any of the centralized stores (HBase, HDFS). Distributed Log Collection for Hadoop, Packt Publishing. From the Flume 1.6.0 User Guide (Introduction, Overview): Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating, and moving large amounts of log data from many different sources to a centralized data store. Step 3: create a directory named flume in the same directory where the installation directories of Hadoop, HBase, and other software were installed (if you have already installed any).
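
Step 3 amounts to a single `mkdir flume` run inside the parent directory of your Hadoop and HBase installations. If you script your setup, a Python sketch might look like the following; `create_flume_dir` is a hypothetical helper, and `install_root` is assumed to be that existing parent directory (for example `/usr/local`, which is only an example path).

```python
from pathlib import Path


def create_flume_dir(install_root: str) -> Path:
    """Create <install_root>/flume alongside the Hadoop/HBase install directories.

    install_root is a hypothetical parameter: the directory that already holds
    your other installation directories. It must exist; only the final
    "flume" component is created.
    """
    flume_dir = Path(install_root) / "flume"
    # exist_ok=True makes the step safe to re-run if the directory already exists.
    flume_dir.mkdir(exist_ok=True)
    return flume_dir
```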

Apache Flume: Distributed Log Collection for Hadoop. Flume is customizable and supports various sources and sinks, such as Kafka, Avro, spooling directory, and Thrift. It is used to stream logs from application servers to HDFS for ad hoc analysis. Its main goal is to deliver data from applications to Apache Hadoop's HDFS.
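
The spooling directory source watches a directory for new, immutable files and turns their lines into events. The Python sketch below imitates that behavior under stated assumptions: `spool_once` is a hypothetical helper, it emits one event body per line, and after a file is fully read it renames it with a `.COMPLETED` suffix (the default `fileSuffix` of Flume's spooling directory source) so the file is not ingested twice. In real Flume you configure this source (`type = spooldir` plus a `spoolDir` path) rather than write code.

```python
from pathlib import Path
from typing import Iterator

COMPLETED_SUFFIX = ".COMPLETED"  # default fileSuffix of Flume's spooling directory source


def spool_once(spool_dir: str) -> Iterator[bytes]:
    """Yield one event body per line from each unprocessed file in spool_dir,
    then rename the file with COMPLETED_SUFFIX so it is never read twice."""
    for path in sorted(Path(spool_dir).iterdir()):
        # Skip subdirectories and files already marked as completed.
        if not path.is_file() or path.name.endswith(COMPLETED_SUFFIX):
            continue
        with path.open("rb") as handle:
            for line in handle:
                yield line.rstrip(b"\r\n")
        # Only mark the file done after every line has been consumed.
        path.rename(path.with_name(path.name + COMPLETED_SUFFIX))
```

Because the rename happens only after the last line is yielded, a consumer that stops early leaves the file unmarked, so it would be picked up again on the next pass.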

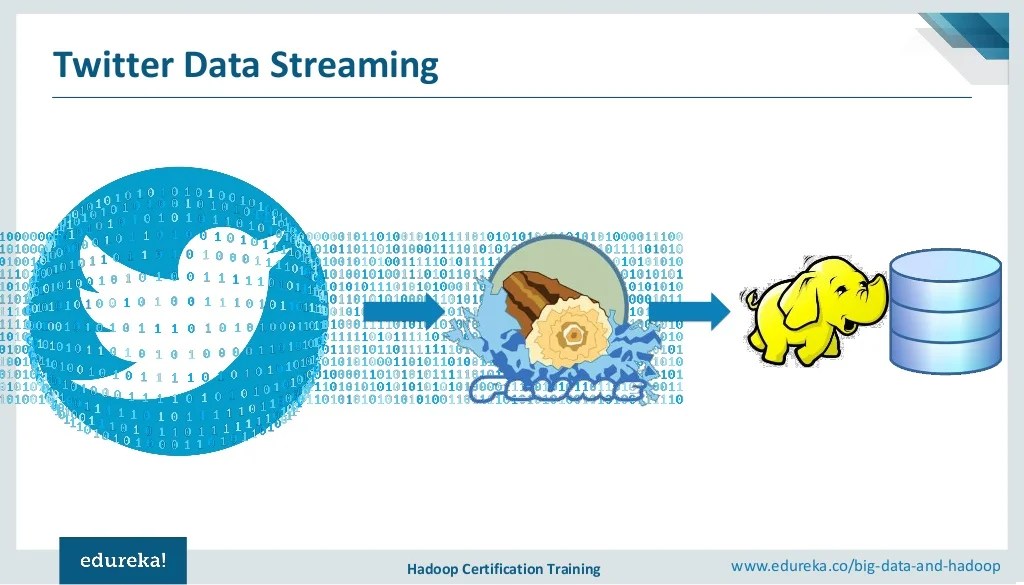

Apache Flume Tutorial: Twitter Data Streaming Using Flume. The opening chapter, Overview and architecture, covers:

- The problem with HDFS and streaming data/logs
- Sources, channels, and sinks
- Flume events
- Interceptors, channel selectors, and sink processors

This book starts with an architectural overview of Flume and its logical components. It explores channels, sinks, and sink processors.
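
Interceptors and channel selectors are easiest to picture as small functions over an event's headers. The Python sketch below is illustrative only and the function names are hypothetical. The timestamp interceptor adds an epoch-millisecond `timestamp` header; this sketch keeps an existing value, whereas Flume's own timestamp interceptor overwrites it unless `preserveExisting` is set. The multiplexing selector routes on one header value with a default, in the spirit of Flume's `selector.header`, `selector.mapping.*`, and `selector.default` settings.

```python
import time
from typing import Dict, List, Tuple


def timestamp_interceptor(headers: Dict[str, str], body: bytes) -> Tuple[Dict[str, str], bytes]:
    """Return a copy of the headers with an epoch-millisecond 'timestamp' added.

    Unlike Flume's default, this sketch keeps a timestamp that is already present.
    """
    headers = dict(headers)
    headers.setdefault("timestamp", str(int(time.time() * 1000)))
    return headers, body


def multiplexing_selector(headers: Dict[str, str], header: str,
                          mapping: Dict[str, List[str]], default: List[str]) -> List[str]:
    """Pick target channels from the value of one header, or fall back to default."""
    return mapping.get(headers.get(header, ""), default)


def replicating_selector(channels: List[str]) -> List[str]:
    """Replicating selection: every event is copied to every configured channel."""
    return list(channels)
```

For example, with `mapping = {"CA": ["c1"], "AZ": ["c2"]}` and `default = ["c3"]`, an event whose `state` header is `CA` goes to `c1`, and an event with no `state` header goes to `c3`.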

Flume Apache Hadoop Scalability: A program called md5, md5sum, or shasum is included in many Unix distributions for verifying the checksum of a downloaded file. Distributed Log Collection for Hadoop covers the problems with HDFS and streaming data/logs, and how Flume can resolve them. Design and implement a series of Flume agents to send streamed data into Hadoop.
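
Where `md5sum` or `shasum` is not available, Python's standard `hashlib` module computes the same digests. In this sketch, `file_digest` and `verify_download` are hypothetical helpers; the expected value is assumed to come from the checksum file published alongside the release, in the common `<hex>  <filename>` layout. Pass whichever algorithm matches that file (for example `"md5"`, `"sha1"`, or `"sha512"`).

```python
import hashlib


def file_digest(path: str, algorithm: str = "sha512", chunk_size: int = 1 << 20) -> str:
    """Hex digest of a file, read in chunks so large tarballs need little memory."""
    digest = hashlib.new(algorithm)  # e.g. "md5", "sha1", "sha512"
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: str, published: str, algorithm: str = "sha512") -> bool:
    """Compare a file against a published checksum line.

    Checksum files often look like "<hex>  <filename>", so only the first
    whitespace-separated token is used, compared case-insensitively.
    """
    tokens = published.split()
    if not tokens:
        return False
    return file_digest(path, algorithm) == tokens[0].lower()
```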

In this chapter, an overview of Apache Flume and its architectural components, along with its workflow, is discussed. This is the first update to Steve's first book, Apache Flume: Distributed Log Collection for Hadoop. I'd again like to dedicate this updated book to my loving and supportive wife, Tracy.

Apache Hadoop (Big Data) Blackbook (eBook): In this tutorial, we use a simple, illustrative example to explain the basics of Apache Flume and how to use it in practice. The Flume quick start covers a Flume configuration file overview and starting up with "Hello World".
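
A Flume configuration file is a Java properties file in which every key is prefixed with the agent name. The Python sketch below renders the classic "Hello World" single-node setup from the Flume User Guide: a netcat source, a memory channel, and a logger sink on an agent named `a1`. `render_flume_config` and `HELLO_WORLD` are hypothetical names used only for this illustration; the property keys themselves are the ones Flume reads.

```python
def render_flume_config(agent: str, settings: dict) -> str:
    """Render flat key/value settings as Flume properties lines, each key
    prefixed with the agent name (e.g. 'a1.sources = r1')."""
    return "".join(f"{agent}.{key} = {value}\n" for key, value in settings.items())


HELLO_WORLD = {
    # Name the components of this agent.
    "sources": "r1",
    "sinks": "k1",
    "channels": "c1",
    # Netcat source: each line of text received on the port becomes an event.
    "sources.r1.type": "netcat",
    "sources.r1.bind": "localhost",
    "sources.r1.port": 44444,
    # Logger sink: logs events at INFO level, handy for testing.
    "sinks.k1.type": "logger",
    # Memory channel: buffers events in RAM.
    "channels.c1.type": "memory",
    "channels.c1.capacity": 1000,
    "channels.c1.transactionCapacity": 100,
    # Wire it up: a source may feed several channels, a sink drains exactly one.
    "sources.r1.channels": "c1",
    "sinks.k1.channel": "c1",
}

if __name__ == "__main__":
    print(render_flume_config("a1", HELLO_WORLD), end="")
```

Saved as, say, `example.conf`, the output can be started with `bin/flume-ng agent --conf conf --conf-file example.conf --name a1 -Dflume.root.logger=INFO,console`; lines typed into `telnet localhost 44444` should then appear as logged events. Note the asymmetry the sketch preserves: a source lists `channels` (plural), while a sink names a single `channel`.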

She puts up with a lot, and that is very much appreciated. Flume uses two separate transactions: one from source to channel and one from channel to sink.
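
To illustrate the two transactions, the Python sketch below models a channel where a source's puts stay staged until commit, and a sink's takes can be rolled back so events are redelivered rather than lost. `TransactionalChannel` and `Transaction` are hypothetical classes loosely modeled on the begin/commit/rollback pattern Flume uses; checking capacity at commit time is a simplifying assumption of this sketch.

```python
from collections import deque


class TransactionalChannel:
    """Channel sketch with Flume-like put and take transactions (illustrative)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.events: deque = deque()

    def begin(self) -> "Transaction":
        return Transaction(self)


class Transaction:
    """Stages puts until commit; remembers takes so rollback can restore them."""

    def __init__(self, channel: TransactionalChannel) -> None:
        self._channel = channel
        self._puts: list = []
        self._takes: list = []

    def put(self, event) -> None:
        # Source side: staged only; invisible to sinks until commit().
        self._puts.append(event)

    def take(self):
        # Sink side: remove the oldest committed event, remembering it for rollback.
        if not self._channel.events:
            return None
        event = self._channel.events.popleft()
        self._takes.append(event)
        return event

    def commit(self) -> None:
        if len(self._channel.events) + len(self._puts) > self._channel.capacity:
            raise OverflowError("channel full: roll back and retry later")
        self._channel.events.extend(self._puts)
        self._puts.clear()
        self._takes.clear()

    def rollback(self) -> None:
        # Discard staged puts; return taken events to the head in original order.
        self._channel.events.extendleft(reversed(self._takes))
        self._puts.clear()
        self._takes.clear()
```

Keeping the two sides in separate transactions means a sink that fails to write to HDFS can roll back its take without affecting the source, and the same events are offered again on the next attempt.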

Apache Flume: Distributed Log Collection for Hadoop. With this complete reference guide, you'll learn Flume's rich set of features for collecting, aggregating, and writing large amounts of streaming data to the Hadoop Distributed File System (HDFS).

Besides HDFS, Flume can write large amounts of streaming data to Apache HBase and SolrCloud.

Using Kudu with Apache Spark and Apache Flume (O'Reilly Media): When the rate of incoming data exceeds the rate at which data can be written to the destination, Flume acts as a mediator between data producers and the centralized stores and provides a steady flow of data between them.
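
The mediating role of the channel can be shown with a tick-based simulation. This Python sketch is a simplified model, not Flume code: `simulate_buffering` is a hypothetical function, producers offer a given number of events per tick, the sink writes at a fixed rate, and anything that does not fit in the channel is counted as refused (in Flume the source would see the full channel and have to retry).

```python
def simulate_buffering(arrivals, drain_per_tick: int, capacity: int):
    """Tick-based model of a channel between a bursty source and a slower sink.

    arrivals[i] is how many events producers offer at tick i; the sink writes
    at most drain_per_tick events per tick; the channel holds at most capacity.
    Returns (backlog after each tick, number of events the channel refused).
    """
    backlog, refused, history = 0, 0, []
    for offered in arrivals:
        accepted = min(offered, capacity - backlog)  # a full channel refuses the rest
        refused += offered - accepted
        backlog += accepted
        backlog -= min(backlog, drain_per_tick)  # the sink drains at its own pace
        history.append(backlog)
    return history, refused
```

With arrivals `[10, 10, 0, 0, 0]`, a sink draining 4 events per tick, and a capacity of 15, the backlog after each tick is `[6, 11, 7, 3, 0]` and 1 event is refused: the channel absorbs the burst, and the sink catches up once producers go quiet.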

Apache Flume, Data Lake for Enterprises Book: You can also verify the MD5 or SHA-1 signatures of the downloaded files. Configure failover paths and load balancing to remove single points of failure.
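
In Flume, failover and load balancing are sink processors configured on a sink group (`processor.type = failover` with a per-sink `priority`, or `load_balance` with a `round_robin` or `random` selector). The Python sketch below shows only the selection logic; `FailoverSinkProcessor` and `RoundRobinSinkProcessor` are hypothetical classes, sinks are plain callables that raise on failure, and Flume's backoff and penalty handling for failed sinks is deliberately left out.

```python
from typing import Callable, List, Sequence, Tuple

Sink = Callable[[object], None]


class FailoverSinkProcessor:
    """Send each event to the highest-priority sink that accepts it."""

    def __init__(self, prioritized: Sequence[Tuple[int, Sink]]) -> None:
        # Higher priority value is tried first, as with Flume's failover processor.
        ordered = sorted(prioritized, key=lambda pair: pair[0], reverse=True)
        self._sinks: List[Sink] = [sink for _, sink in ordered]

    def process(self, event) -> Sink:
        errors = []
        for sink in self._sinks:
            try:
                sink(event)
                return sink
            except Exception as exc:  # sketch: any exception counts as a sink failure
                errors.append(exc)
        raise RuntimeError(f"all sinks failed: {errors!r}")


class RoundRobinSinkProcessor:
    """Spread events across sinks in turn, skipping a sink that fails."""

    def __init__(self, sinks: Sequence[Sink]) -> None:
        self._sinks = list(sinks)
        self._next = 0

    def process(self, event) -> Sink:
        for _ in range(len(self._sinks)):
            sink = self._sinks[self._next]
            self._next = (self._next + 1) % len(self._sinks)
            try:
                sink(event)
                return sink
            except Exception:
                continue  # try the next sink in the rotation
        raise RuntimeError("all sinks failed")
```

Failover keeps one sink hot and others on standby, while round-robin spreads load and only falls back when a sink fails; either way, no single downstream sink is a single point of failure.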

The use of Apache Flume is not restricted to log data aggregation. This book is ideal for programmers looking to analyze datasets of any size, and for administrators who want to set up and run Hadoop clusters.

Read the Using Flume book.

How can you get your data from front-end servers to Hadoop in near real time?
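
One common answer is a tiered (multi-hop) topology: lightweight agents on each front-end server forward events to a collector agent that writes to HDFS. In Flume the hop between agents is usually an Avro sink on one agent paired with an Avro source on the next. The Python sketch below models only the data movement; `tiered_collect` and the dict-based events are hypothetical, and a list of batches stands in for files written to HDFS.

```python
from typing import Dict, List


def tiered_collect(frontend_logs: Dict[str, List[str]], batch_size: int) -> List[List[dict]]:
    """Two-tier collection sketch.

    Tier 1: an agent on each front-end host wraps log lines as events and tags
    them with a 'host' header before forwarding them to the collector.
    Tier 2: the collector writes what it receives to the central store in
    fixed-size batches (each batch stands in for one file written to HDFS).
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    collector_inbox: List[dict] = []
    for host, lines in frontend_logs.items():
        for line in lines:
            # Hop 1: front-end agent -> collector (Avro sink -> Avro source in Flume).
            collector_inbox.append({"headers": {"host": host}, "body": line})
    # Hop 2: collector -> centralized store, in batches.
    return [collector_inbox[i:i + batch_size]
            for i in range(0, len(collector_inbox), batch_size)]
```

In a real deployment each hop has its own channel, so if the collector is briefly unavailable, events back up in the front-end agents' channels (within their capacity) instead of being lost.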

Apache Flume Architecture, Modern Big Data Processing: Apache Flume 1.9.0 is signed by Ferenc Szabo (79e8e648).
